Artificial Intelligence - Modeling and Evaluation

Overview

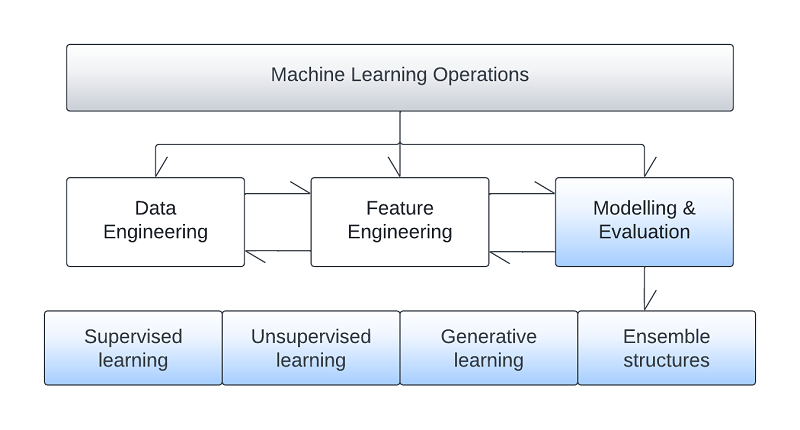

During the Modelling and Evaluation phase of the AI pipeline a multitude of AI cores can be used to implement the AI business case at hand. The list of major genres is an ever evolving one and different taxonomy attempts have been made that differ depending on the perspective used to categorise the algorithms. Throughout our use case page, we explore three major AI genres depending on their learning objectives, supervised, unsupervised and generative. We also present architectures that combine multiple AI cores known as ensembles.

Supervised learning

An AI whose learning involves historical knowledge of outcomes through input and target variables that map business objectives belongs to the supervised learning genre. Essentially, before training the AI cores, there exists a labelled dataset we can use to ask the AI to optimise decision performance based on that prior knowledge. The list of AI cores in this learning genre includes Neural Networks, Deep Neural Networks, as well as simpler algorithms such as polynomial or logistic regressions, decision trees and their derivatives and statistical techniques such as Naïve Bayes.

Unsupervised learning

In unsupervised learning we have no historical knowledge available concerning the underlying target variable that maps business objectives. What is available, is a dataset that historically models the general behaviour of the system within an ecosystem. In this case, the AI discover patterns within the data that can be used for decision making in the future. We can find numerous AI methodologies in this genre, for example Deep neural networks can be used in all types of unsupervised learning, while other techniques such as OPTICS, k-means, or hierarchical clustering are more orientated to specific tasks. As unsupervised learning accumulates inference, it can act a steppingstone for early supervised learning, a genre that is known as semi-supervised learning.

Generative learning

Generative AI is a combination of deep learning structures often driven by deep reinforcement learning. The prominent deep learning structure is the transformer that can analyse big data sequences and discover pattern sequences within the data. In the field of NLP Deep Reinforcement Learning is a combination of unstructured deep learning and reinforcement learning based on control theory. The latter is divided in two main categories, Markov processes and Evolutionary processes. The main concept is that the AI system is left to explore a digital twin of the problem space and is only provided with positive or negative feedback depending on its decisions. The AI gradually calibrates its behaviour so that its decisions maximise the rewards it collects.

Ensemble structures

A collection of AI cores working together and contributing their decisions to each other to solve a problem is called an ensemble structure. Ensembles, tend to produce better results than unitary AI algorithms especially if there is enough diversification within the structure. Architectures include collective algorithms such as xgboost, that acts as a collection of the same algorithm, in this instance decision trees, replicated many times, in this case known as random forests. They can also be proprietary collections working under a governance structure, a methodology that is called stacking. The governance structure itself can be as simple as a voting system or as complex as an AI overseeing all AI cores. Other techniques that can combine models to ensembles include bagging, boosting, Bayesian model averaging, and bucketing.

Learning optimisations

Training, validating, and testing is a step that is implemented after choosing the AI core. A combined strategy related to these three phases of learning is defined. The output of the process is an optimized AI core that delivers a good tradeoff between variance and bias. The data is divided into appropriate subsets for training and testing as well as a decision is made on stoppage training criteria, hyperparameter optimisation and learning metrics to use to evaluate the AI cores. To prevent biasing during making these decisions, part of the data is completely left unknown to the team. Several issues may arise during the process with overfitting and underfitting being the most prominent ones. The process of AI learning can be time consuming, costly and a very delicate balance of choices.