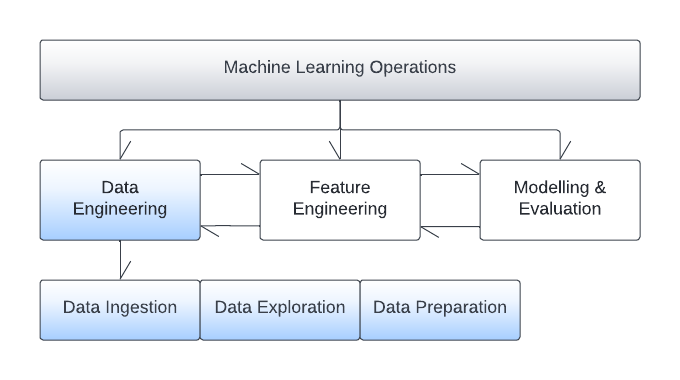

Artificial Intelligence - Data Engineering

Overview

Data engineering is the natural first step once the business scope and the expectations of an AI solution have been defined. One of the data strategy deliverables of the business understanding phase is the minimum data schema required to model the problem at hand. Given this schema, a data ingestion strategy is implemented to source the appropriate data from its various original locations. An exploration of the data space follows, so that data scientists can investigate baseline dataset characteristics such as distributions and quality. The data then goes through a thorough preparation process to ensure the delivery of a high-quality dataset. During this phase, all the appropriate pipelines are built to clean and prepare the data for the next phases of the AI pipeline.

Data ingestion

Companies today focus on gathering large volumes of both structured and unstructured data. Sources include databases, APIs, websites, social media, IoT sensors, blogs, emails and more. During this data engineering step, appropriate scripts and automation processes are implemented to gather the data from all the disparate sources and store it in a single data store. LSEG D&A already provides a single source for a multitude of industry datasets and APIs, allowing users to quickly tap into this rich ecosystem of AI-ready structured and unstructured data. This consistency is the result of the complex preprocessing that our products have already applied to the various disparate datasets.
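The gathering step described above can be sketched as follows. This is a minimal illustration, not a description of any LSEG product or API: the two in-memory payloads, the `prices` table, and every function name are hypothetical stand-ins for real sources and a real data store.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw payloads standing in for two disparate sources.
CSV_SOURCE = "symbol,price\nAAA,10.5\nBBB,20.0\n"
JSON_SOURCE = '[{"symbol": "CCC", "price": 30.25}]'


def read_csv_source(text):
    """Yield (symbol, price) tuples from CSV text."""
    for row in csv.DictReader(io.StringIO(text)):
        yield row["symbol"], float(row["price"])


def read_json_source(text):
    """Yield (symbol, price) tuples from a JSON array of objects."""
    for item in json.loads(text):
        yield item["symbol"], float(item["price"])


def ingest(conn, records, source_name):
    """Append records to the single store, tagging each row with its origin."""
    conn.executemany(
        "INSERT INTO prices (symbol, price, source) VALUES (?, ?, ?)",
        [(symbol, price, source_name) for symbol, price in records],
    )
    conn.commit()


def build_store():
    """Create an in-memory store and load both hypothetical sources into it."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE prices (symbol TEXT, price REAL, source TEXT)")
    ingest(conn, read_csv_source(CSV_SOURCE), "csv_feed")
    ingest(conn, read_json_source(JSON_SOURCE), "json_api")
    return conn


if __name__ == "__main__":
    store = build_store()
    query = "SELECT symbol, price, source FROM prices ORDER BY symbol"
    for row in store.execute(query):
        print(row)
```

Each source gets its own small reader that normalises records to a common shape, so adding a new source means adding one reader rather than changing the store. Recording the origin of every row keeps later quality checks traceable to a source.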

Data exploration

Once the data is gathered in a central storage area, often called the data lake, an initial exploration will lead to certain conclusions about the quality and other important data characteristics such as min-max statistics, data types and more. Data distributions are analysed within the parameter space, and data consistency and clarity are explored. Statistical distribution tests, such as tests for normality, are usually performed as hypothesis tests on the relevant features.
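As a rough illustration of these checks, the sketch below computes basic descriptive statistics and a normality test for a single numeric feature. The Jarque-Bera test is used here only as one example of a normality test; the text does not prescribe a specific one. The p-value relies on the asymptotic chi-squared approximation, which is unreliable for small samples, and the function names are illustrative.

```python
import math
import statistics


def summarise(values):
    """Return basic descriptive statistics for a numeric feature."""
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": statistics.fmean(values),
        "stdev": statistics.pstdev(values),
    }


def jarque_bera(values):
    """Return (statistic, p_value) for the Jarque-Bera normality test.

    Under the null hypothesis of normality the statistic is asymptotically
    chi-squared with 2 degrees of freedom, whose survival function is
    exp(-x / 2).
    """
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n  # population variance
    if m2 == 0:
        raise ValueError("feature is constant; the test is undefined")
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    statistic = n / 6 * (skewness ** 2 + (kurtosis - 3) ** 2 / 4)
    return statistic, math.exp(-statistic / 2)


if __name__ == "__main__":
    skewed = [1.0] * 50 + [100.0]  # hypothetical feature with one extreme value
    print(summarise(skewed))
    statistic, p_value = jarque_bera(skewed)
    # A small p-value rejects the hypothesis that the feature is normal.
    print(f"JB statistic={statistic:.2f}, p-value={p_value:.4g}")
```

In practice a library implementation of the chosen test would normally be preferred; the point here is only to show what the hypothesis test measures, namely how far the sample skewness and kurtosis are from those of a normal distribution.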

During this phase, the AI data engine will also reveal any noise in the datasets, and strategies will be formulated to resolve or smooth such inconsistencies.
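One simple smoothing strategy, shown here purely as an example, is a trailing moving average. The window size and the function name are assumptions; the appropriate strategy depends on the dataset and the kind of noise found.

```python
def moving_average(values, window=3):
    """Smooth a series with a trailing moving average.

    The first window - 1 points are averaged over the values seen so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]  # drop the value leaving the window
        smoothed.append(running / min(i + 1, window))
    return smoothed


if __name__ == "__main__":
    noisy = [10, 10, 40, 10, 10]  # hypothetical series with a single spike
    print(moving_average(noisy, window=3))
```

A larger window removes more noise but also blurs genuine short-lived changes, so the window is usually chosen with the downstream model in mind.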

Data preparation

After the initial data exploration, data cleansing and synchronisation methodologies can be applied. A multitude of techniques can be used to enhance the quality of the available dataset and prepare it for the next phase of the Artificial Intelligence pipeline, the feature engineering phase. The main techniques aim to cleanse, transform, combine, or synchronise the raw dataset. In the context of data cleansing, many methodologies can be applied to tackle problems such as missing or null values, outliers, stray whitespace, special characters and more.
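The cleansing problems listed above can each be handled in a few lines. The sketch below is one possible approach under stated assumptions: median imputation for missing values, the interquartile-range rule for outliers, and a regular expression that keeps only letters, digits, underscores, spaces, dots and hyphens. None of these choices comes from the text, and the function names are illustrative.

```python
import re
import statistics


def clean_text(value):
    """Drop special characters, collapse whitespace runs, and trim the ends."""
    if value is None:
        return None
    value = re.sub(r"[^\w\s.-]", "", value)  # remove special characters
    value = re.sub(r"\s+", " ", value).strip()
    return value or None  # treat an empty result as missing


def impute_missing(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def iqr_outlier_flags(values, k=1.5):
    """Flag values outside [Q1 - k * IQR, Q3 + k * IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


if __name__ == "__main__":
    print(clean_text("  Acme   Corp!! \t"))
    print(impute_missing([1.0, None, 3.0]))
    print(iqr_outlier_flags([10, 11, 12, 13, 14, 100]))
```

Flagging outliers rather than deleting them leaves the decision to a later step, which is useful when an extreme value may be a genuine observation rather than an error.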

Data transformations, such as constructing or deconstructing the data or applying aesthetic or structural changes, can improve the quality and predictive power of the data. Such transformations can sometimes be an expensive process. For example, aggregating a decimal column to periodic averages is a constructive transformation. Deleting a column is a destructive and structural transformation. Renaming a column is an aesthetic transformation, whereas combining two columns into one is a constructive and structural transformation. Other types of transformations include encryption, anonymisation, indexing, ordering, typecasting and more.

Finally, combining and synchronisation is the process of merging different data sources into a single dataset to homogenise significant features. Combining different data sources often generates discrepancies, because timestamps or other attribute aggregations can differ between the sources. The purpose of this engineering step is to smooth out such discrepancies and present a homogeneous dataset for the next AI pipeline phase.
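Two of the ideas above lend themselves to a short sketch: aggregating a numeric column to periodic averages, and synchronising two sources whose timestamps do not line up. The second function uses an as-of join, which attaches to each row of one source the most recent value from the other. That is only one way to reconcile timestamps and is an assumption of this example, as are the function names and the `prices` and `sentiment` sample series.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import datetime
from statistics import fmean


def periodic_average(rows, period_format="%Y-%m-%d"):
    """Aggregate (timestamp, value) rows to one average per period."""
    buckets = defaultdict(list)
    for timestamp, value in rows:
        buckets[timestamp.strftime(period_format)].append(value)
    return {period: fmean(values) for period, values in sorted(buckets.items())}


def asof_join(left, right):
    """Attach to each left row the latest right value at or before its timestamp.

    Both inputs are lists of (timestamp, value) sorted by timestamp. Left rows
    that precede every right row get None.
    """
    right_times = [timestamp for timestamp, _ in right]
    joined = []
    for timestamp, value in left:
        index = bisect_right(right_times, timestamp) - 1
        match = right[index][1] if index >= 0 else None
        joined.append((timestamp, value, match))
    return joined


if __name__ == "__main__":
    # Hypothetical sources sampled at different times.
    prices = [
        (datetime(2024, 1, 1, 9, 0), 10.0),
        (datetime(2024, 1, 1, 15, 0), 12.0),
        (datetime(2024, 1, 2, 9, 0), 14.0),
    ]
    sentiment = [
        (datetime(2024, 1, 1, 8, 30), 0.2),
        (datetime(2024, 1, 1, 14, 0), 0.6),
    ]
    print(periodic_average(prices))
    for row in asof_join(prices, sentiment):
        print(row)
```

Looking only backwards in time means a row is never paired with information that was not yet available at its timestamp, which matters when the merged dataset later feeds a predictive model.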