Purpose

This tutorial is the fourth in a series on Tick History data inside Google BigQuery.

The preceding tutorial explained how to perform analytics on data.

For your convenience, a set of sample queries is available in a downloadable file.

Optimising SQL queries: an example

As we saw in the previous tutorial, our code might benefit from some tuning.

In the last example the passing of parameters was a bit awkward.

But optimization can also be applied to increase performance and reduce costs, as was hinted at when we saw the quote and trade analytics.

If you studied the code closely, you might have noticed that the Quote Statistics query was not written in an optimal manner: the same maximum, minimum and average values were calculated in different places, thus duplicating some of the effort. Writing our queries in a different way can reduce the server load by avoiding such inefficiencies, and thus deliver results even faster.

As an example, here is a second version of the quote analytics, which avoids calculation duplication:

#StandardSQL

WITH

RAWSTATS AS(

SELECT

RIC,

COUNT(RIC) AS QuotesCount,

MIN(Bid_Price) AS MinBid,

MAX(Bid_Price) AS MaxBid,

MIN(Ask_Price) AS MinAsk,

MAX(Ask_Price) AS MaxAsk,

AVG(Bid_Price) AS AvgBid,

AVG(Ask_Price) AS AvgAsk,

AVG(Ask_Price-Bid_Price) AS AverageSpread,

AVG(SAFE_DIVIDE(100*(Ask_Price-Bid_Price),(Ask_Price+Bid_Price)/2)) AS AverageSpreadPercent

FROM

`dbd-sdlc-prod.LSE_NORMALISED.LSE_NORMALISED`

WHERE

RIC LIKE "%.L%"

AND (Date_Time BETWEEN TIMESTAMP('2019-09-04 00:00:00.000000') AND

TIMESTAMP('2019-09-04 23:59:59.999999'))

AND Type="Quote"

AND ((Bid_Price IS NOT NULL AND Bid_Size IS NOT NULL) OR

(Ask_Price IS NOT NULL AND Ask_Size IS NOT NULL))

AND NOT (Qualifiers LIKE 'M[IMB_SIDE]')

AND NOT (Qualifiers LIKE 'M[ASK_TONE]')

AND NOT (Qualifiers LIKE 'M[BID_TONE]')

AND NOT (Qualifiers LIKE '[BID_TONE];[ASK_TONE]')

AND NOT (Qualifiers LIKE 'A[BID_TONE];A[ASK_TONE]')

GROUP BY

RIC

)

SELECT

RIC,

QuotesCount,

MinBid,

MaxBid,

MinAsk,

MaxAsk,

ROUND(SAFE_DIVIDE(100*(MaxBid-MinBid),AvgBid),3) AS DeltaBidPercent,

ROUND(SAFE_DIVIDE(100*(MaxAsk-MinAsk),AvgAsk),3) AS DeltaAskPercent,

ROUND(AvgBid,3) AS AvgBid,

ROUND(AvgAsk,3) AS AvgAsk,

ROUND(AverageSpread,5) AS AverageSpread,

ROUND(AverageSpreadPercent,5) AS AverageSpreadPercent

FROM

RAWSTATS

ORDER BY

QuotesCount DESC

Even though this second version is longer than the previous one, it runs approximately 8% faster.

The trade analytics sample we saw previously has the same flaw. After optimisation it also benefits from a similar performance increase.

This introduced us to the topic of query optimization.

Optimising SQL queries: best practices

There are some general rules you can apply to ensure your queries run in an efficient manner, deliver results quickly, and cost as little as possible:

1. Minimize the amount of data you query and analyse

- Query only the columns you need (avoid using SELECT *).

- Restrict the date range.

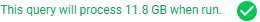

Note: when working in the console, if the query syntax is correct, the size of the data set that will be processed is displayed beneath the editor window:

2. Avoid repeatedly transforming data via your SQL queries

This was illustrated by the two versions of the query we just saw.

3. Order operations to maximize performance

- Filter data before sorting it.

- Prune data as much as possible before performing complex operations on it.

4. Use a LIMIT clause with large sorts

Following these 4 simple rules will help you optimize your queries. For more detailed information and tips, refer to the following Google documentation:

UDFs and performance

With a UDF (User Defined Function) you can create a function using an SQL expression or JavaScript code, which can even be extended by importing external libraries.

UDFs can either be persistent or temporary. Persistent UDFs can be reused across multiple queries, whereas temporary UDFs can only be used in a single query.

What is the impact of a UDF ?

Let us consider a simple mean quote price calculation. This can be calculated directly in a SQL query:

ROUND((Bid_price+Ask_Price)/2,4) AS Mid_Price,

This can also be implemented in a UDF. Here is the same calculation in a UDF that uses SQL code:

CREATE TEMPORARY FUNCTION

Mid_Price(bid FLOAT64, ask FLOAT64)

RETURNS FLOAT64

AS (ROUND((bid+ask)/2,4));

Finally, here is the same calculation in a UDF that uses JavaScript code:

CREATE TEMPORARY FUNCTION

Mid_Price(bid FLOAT64, ask FLOAT64)

RETURNS FLOAT64

LANGUAGE js AS "return Math.round(((bid+ask)/2)*10000)/10000;";

How do these 3 versions compare from a pure performance point of view ?

- The run time of the pure SQL query, and that of the SQL UDF, are similar.

- Both SQL versions (with and without UDF) run approximately 45% faster than the JavaScript UDF.

This shows that creating a UDF does not have a performance impact if coded in SQL, but using JavaScript code will slow it down significantly. It is therefore best to limit JavaScript to cases that cannot be implemented using SQL.

For more information on UDFs, their implementation, best practices, and limits, refer to the Google BigQuery UDF documentation and best practices.

Samples list

Using the techniques we have just seen, you can build analytics based on quotes, trades or market depth data, summarise data in bars, calculate benchmarks, compare execution prices, find interesting instruments by volume or price change, and much more.

The downloadable sample set gives you all the SQL query examples we have seen, including some optimised versions:

Tick examples:

- Number of ticks - Count all ticks (quotes, trades, etc.)

Quote examples:

- Quotes - Retrieve quotes, first sample in the Data Retrieval tutorial

- Quotes Persistent - Retrieve quotes, persist bid and ask prices to fill gaps

- Quote Mid Price SQL - Mid price calculation using SQL, discussed above

- Quote Mid Price UDF JS - Mid price calculation using a JavaScript UDF, discussed above

- Quote Mid Price UDF SQL - Mid price calculation using a SQL UDF, discussed above

- Quote Statistics - First sample in the Data Analytics tutorial

- Quote Statistics v2 - Optimised version of Quote statistics, discussed above

Trade examples:

- Trades - Retrieve trades, second sample in the Data Retrieval tutorial

- Trade Statistics - Second sample in the Data Analytics tutorial

- Trade Statistics v2 - Optimised version of Trade Statistics

- Bars - Create trade bars

- Bars v2 - Optimised version of Bars

- Execution Comparison - Compare a trade you made with the market, fourth sample in the Data Analytics tutorial

- Execution Comparison v2 - Optimised version of Execution comparison

Market depth examples:

- Market Depth 3 levels - Retrieve 3 levels of market depth, third sample in the Data Retrieval tutorial

- Market Depth 5 levels - Retrieve 5 levels of market depth

- Market Depth Barycenters - Calculate the market depth barycentre, third sample in the Data Analytics tutorial

Conclusion

As we have seen, it is very easy to access LSEG Tick History data and analyse Gigabytes of data it in a matter of seconds using Google BigQuery. As the requests rely entirely on SQL, you don’t need any other skills to create powerful analytics in a very short time.

If you would like to dig further, here is a summary of all the reference links mentioned in this tutorial set:

- Tick History in Google BigQuery webinar

- Tick History in Google BigQuery article

- Downloadable samples file

- Google BigQuery pages

- Google BigQuery documentation

- Google Cloud Platform (GCP) console documentation

- Google Cloud Platform (GCP) console GUI

- BigQuery best practices for query performance

- Google cost optimization best practices for BigQuery

- Google BigQuery UDF documentation and best practices