| Last update | Dec 2023 |

| Environment | Windows |

| Language | C# |

| Compilers | Microsoft Visual Studio |

| Prerequisites | DSS login, internet access, having done the previous tutorial |

| Source code | Download .Net SDK Tutorials Code |

Tutorial purpose

This is the fifth tutorial in a series of .Net SDK tutorials. It is assumed that the reader has acquired the knowledge delivered in the previous tutorials before following this one.

This tutorial moves away from the scheduled extractions, which mimic what can be done using the GUI, and covers a new topic: On Demand extractions, using an intraday summaries (bar) extraction as an example.

In this tutorial we therefore use the simplified high level API calls for On Demand extractions. We also include some file input and output, and some error handling:

- Populate a large instrument list array read from a CSV file, outside of the DSS server. Errors encountered when parsing the input file are logged to an error file.

- Create an array of data field names, outside of the DSS server.

- Optionally set instrument validation parameters, for instance to allow historical instruments.

- Create and run an on demand data extraction on the DSS server, wait for it to complete.

- Retrieve and display the data and extraction notes. We also write them to separate files, and analyse the notes file to find issues.

The file input and output do not illustrate additional LSEG Tick History REST API functionality, but serve to put it in the context of slightly more productized code.

Table of contents

- Getting ready

- Understanding the On Demand calls

- Important warning on data usage

- Understanding the code

- Code run results

- Conclusions

Getting ready

Opening the solution

The code installation was done in Tutorial 1.

Opening the solution is similar to what was done in Tutorial 1:

- Navigate to the \API TH REST\Tutorial 5\Learning folder.

- Double click on the solution file restful_api_more_advanced_topics.sln to open it in Microsoft Visual Studio.

Referencing the DSS SDK

Important: this must be done for every single tutorial, for both the learning and refactored versions.

This was explained in Tutorial 2; please refer to it for instructions.

For this and the preceding tutorial an additional library must be referenced: SharpZipLib from ICSharpCode. The DLL is delivered with the code samples, but if you prefer you can also use NuGet.

Viewing the C# code

In Microsoft Visual Studio, in the Solution Explorer, double click on Program.cs and on DssClient.cs to display both file contents. Each file will be displayed in a separate tab.

Setting the user account

Before running the code, you must replace YourUserId with your DSS user name, and YourPassword with your DSS password, in these 2 lines of Program.cs:

private static string dssUserName = "YourUserId";

private static string dssUserPassword = "YourPassword";

Important reminder: this will have to be done again for every single tutorial, for both the learning and refactored versions.

Failure to do so will result in an error at run time (see Tutorial 1 for more details).

Understanding the On Demand calls

The On Demand high level calls we use in this tutorial depart from what was done in the previous tutorials.

As stated in the Tutorial Introduction, in the note on programming approaches, there are 2 approaches:

- Create (and modify when required) an instrument list, report template and extraction schedule, all stored on the DSS server, and retrieve your data once the extraction has completed. This mimics what you can do with the web GUI. This approach was used in tutorials 3 and 4.

- Using On Demand advanced API calls, it is also possible to do on the fly data requests without storing an instrument list, report template and extraction schedule on the DSS server. It is a more direct and dynamic way of doing things. In this case the instrument list, report template and extraction schedule are ephemeral, and cannot be seen in the DSS web GUI. This approach is used in this tutorial.

The choice is yours, and will depend on your use case and personal preferences.

The second approach we explore now has two consequences:

- We cannot see any instrument list, report template, schedule or result file using the web GUI.

- Cleanup on the DSS server is not required, as there is nothing to delete.

Once an extraction has completed, the results returned by the API contain:

- The extracted data itself, in compressed format.

- The extraction notes. This contains details of the extraction.

These results are returned by the server to our program for further processing. That could be as simple as saving the data to disk, or it could involve processing the data on the fly. Note that the extraction notes are plain text, whereas the data is gzipped CSV. This difference must be taken into account when handling the two.
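To illustrate the difference between the two formats, here is a minimal sketch that is not part of the tutorial code. It recognizes gzipped content by its two leading magic bytes and decompresses it only when needed. The class and method names are hypothetical, and it uses the .NET built-in GZipStream as a stand-in for the SharpZipLib classes used later in this tutorial.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;

// Hypothetical helper, not part of the tutorial code.
public static class ResultFormatSketch
{
    // Returns true when the buffer starts with the two gzip magic bytes (0x1F, 0x8B).
    public static bool LooksLikeGzip(byte[] content)
    {
        return content != null && content.Length >= 2
            && content[0] == 0x1F && content[1] == 0x8B;
    }

    // Returns the content as text, decompressing it first when it is gzipped.
    public static string ReadAsText(byte[] content)
    {
        if (!LooksLikeGzip(content)) { return Encoding.UTF8.GetString(content); }
        using (MemoryStream input = new MemoryStream(content))
        using (GZipStream gzip = new GZipStream(input, CompressionMode.Decompress))
        using (StreamReader reader = new StreamReader(gzip, Encoding.UTF8))
        {
            return reader.ReadToEnd();
        }
    }

    // Demonstration helper: gzips a text string in memory.
    public static byte[] Gzip(string text)
    {
        using (MemoryStream output = new MemoryStream())
        {
            using (GZipStream gzip = new GZipStream(output, CompressionMode.Compress))
            {
                byte[] raw = Encoding.UTF8.GetBytes(text);
                gzip.Write(raw, 0, raw.Length);
            }
            // ToArray still works after the stream has been closed.
            return output.ToArray();
        }
    }
}
```

With this sketch, ReadAsText returns the same text whether it receives plain text bytes or the output of Gzip.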

Read on to understand how this is done.

Important warning on data usage

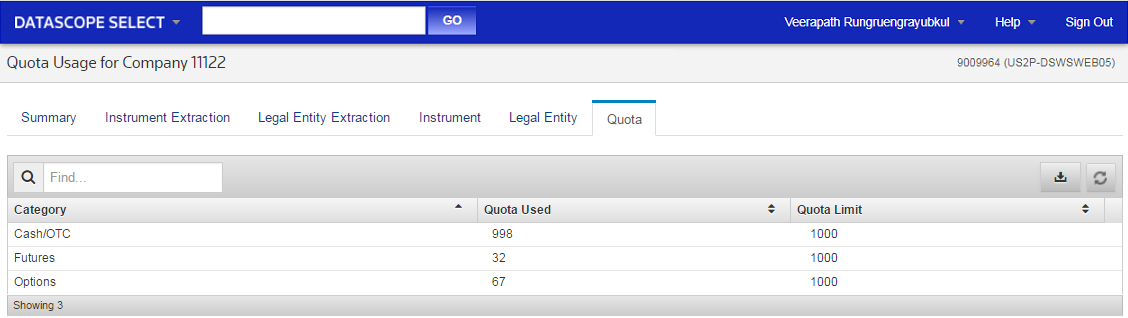

Tick history data request quota

DSS accounts have a quota of instruments that can be requested during a defined time period. The subscription fees are proportional to the size of the quota. If the quota is reached, excess fees will be due. That situation must be avoided!

Data usage can be displayed in the web GUI:

Contact your local account manager or sales specialist if you have queries on your account quota or usage.

Instrument list files delivered with the tutorials

The code associated with this tutorial can handle large instrument lists, read from a file.

Care must be exercised when running this tutorial, to avoid over consuming data.

For this reason, several files are delivered with the tutorials code:

- DSS_API_1942_input_file.csv

- DSS_API_86_input_file.csv

- DSS_API_10_input_file.csv

The first one contains slightly fewer than 2000 valid instrument identifiers, the second one 86, and the last one only 10.

The text and illustrations that follow use the largest file. To avoid consuming too much data, we recommend you use the smallest file for your own tests, which will deliver similar results.

Instrument expansion

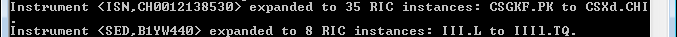

The medium and large instrument list files do not seem to contain so many instruments, but they contain chains, ISINs and Sedols. When the extraction request is processed by the server, the chains will be expanded to their constituents, and the ISINs will be expanded to the list of all corresponding RICs. The expansion mechanism therefore increases the number of instruments in the request, in some cases quite dramatically.

Understanding the code

We shall only describe what is new versus the previous tutorials.

Note: contrary to previous tutorials, this one does not have a refactored version.

DssClient.cs

This is the same as the refactored version of Tutorial 4, except for the leading comment.

Program.cs

At the top of the code we see the using directives. As we are using a whole set of API calls, several using directives are required to give us access to the types of the DSS API namespace, so that we do not have to qualify the full namespace each time we use them. As in the previous tutorials, these are followed by a using directive referring to the namespace of our code. In addition, we have a directive for the SharpZipLib library:

using ICSharpCode.SharpZipLib.GZip;

using DataScope.Select.Api.Extractions;

using DataScope.Select.Api.Content;

using DataScope.Select.Api.Extractions.ReportTemplates;

using DataScope.Select.Api.Extractions.ExtractionRequests;

using DataScope.Select.Api.Extractions.SubjectLists;

using DataScope.Select.Api.Core;

using DssRestfulApiTutorials;

Member declarations

We add a whole set of declarations. The first is related to where we want to download the data from. It is now possible to choose to download the data file either from the LSEG Tick History servers, or directly from the AWS (Amazon Web Services) cloud. It is recommended to use AWS as it delivers faster downloads:

private static bool awsDownload = true;

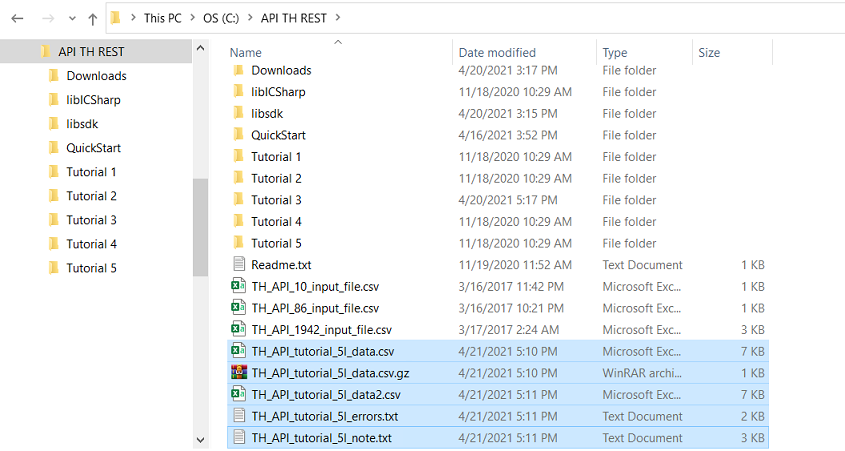

The following define the input and output files and their locations. As output we will create a data file and a separate error log file. As input we have an instrument identifier list:

private static string outputDirectory = "C:\\API TH REST\\";

private static string gzipDataOutputFile = outputDirectory + "TH_API_tutorial_5l_data.csv.gz";

private static string csvDataOutputFile = outputDirectory + "TH_API_tutorial_5l_data.csv";

private static string csvDataOutputFile2 = outputDirectory + "TH_API_tutorial_5l_data2.csv";

private static string noteOutputFile = outputDirectory + "TH_API_tutorial_5l_note.txt";

private static string errorOutputFile = outputDirectory + "TH_API_tutorial_5l_errors.txt";

private static string inputDirectory = outputDirectory;

private static string instrumentIdentifiersInputFile = inputDirectory + "TH_API_10_input_file.csv";

The default directories and file names above can be used as is if you installed the tutorials code as described in Tutorial 1, using the directory structure defined in the Quick Start. If you are using a different folder structure, change the declarations accordingly.

The input file is a CSV file. 3 samples are included with the code, but you can also make your own:

- File format: 1 instrument per line.

- Line format: <identifier type>, <code>, <optional comment>

Example lines; the first two are for a Cusip (CSP) and a RIC:

CSP,31352JRV1

RIC,ALVG.DE

CHR,0#.FCHI,CAC40

CIN,G93882192,Vodafone

COM,001179594,Carrefour

ISN,CH0012138530,CS

SED,B1YW440,3i Group

VAL,24476758,UBS

WPK,A11Q05,Elumeo

The sample input files contain lines with correct line format syntax. They also include lines that are comments, empty or badly structured, to test the error handling included in the code.
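The line format described above can be checked with a few lines of code. The following is a minimal sketch, not the tutorial's own parser (which is shown further down). The class and method names are hypothetical, and trimming the surrounding spaces is an added assumption.

```csharp
using System;

// Hypothetical helper, not part of the tutorial code.
public static class InputLineSketch
{
    // Tries to split "<identifier type>,<code>[,<optional comment>]" into its first two parts.
    // Returns false for empty lines and for lines missing the type or the code.
    public static bool TryParseLine(string line, out string identifierType, out string code)
    {
        identifierType = string.Empty;
        code = string.Empty;
        if (string.IsNullOrWhiteSpace(line)) { return false; }

        string[] parts = line.Split(',');
        if (parts.Length < 2) { return false; }

        // Assumption: surrounding spaces are not significant, so we trim them.
        identifierType = parts[0].Trim();
        code = parts[1].Trim();
        return identifierType.Length > 0 && code.Length > 0;
    }
}
```

For the example line CHR,0#.FCHI,CAC40 this returns true with the type CHR and the code 0#.FCHI; the optional comment is ignored.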

To avoid consuming too much data, we recommend you use small files for your own tests. For more information, refer to the previous section: Important warning on data usage.

A final parameter allows you to limit the number of lines of data that will be output to screen:

private static int maxDataLines = 1000000; //To set a limit to the output data size

Initial file and directory management

After authenticating, we start with some basic input checks.

First we verify if the input file exists. If it does not, we display an error and exit the program:

if (!File.Exists(instrumentIdentifiersInputFile))

{

DebugPrintAndWaitForEnter("FATAL: cannot access " + instrumentIdentifiersInputFile +

"\nCheck if file and directory exist.");

return; //Exit main program

}

Then we verify if the output directory exists. If it does not, we display an error and exit the program:

if (!Directory.Exists(outputDirectory))

{

DebugPrintAndWaitForEnter("FATAL: cannot access directory " + outputDirectory +

"\nCheck if it exists.");

return; //Exit main program

}
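The two checks above could also be grouped into one helper that returns a message instead of exiting directly, which makes them easier to test. This is a minimal sketch under that assumption; the class and method names are hypothetical and not part of the tutorial code.

```csharp
using System;
using System.IO;

// Hypothetical helper, not part of the tutorial code.
public static class StartupChecksSketch
{
    // Returns null when both paths are usable,
    // otherwise a message describing the first problem found.
    public static string FindProblem(string inputFile, string outputDirectory)
    {
        if (!File.Exists(inputFile))
        {
            return "FATAL: cannot access " + inputFile +
                   "\nCheck if file and directory exist.";
        }
        if (!Directory.Exists(outputDirectory))
        {
            return "FATAL: cannot access directory " + outputDirectory +
                   "\nCheck if it exists.";
        }
        return null;
    }
}
```

The main program could then display the returned message and exit whenever the result is not null.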

Then we clear the output files of any content left over from a previous run, and create them if they do not exist. Here is the code for the first file, the others are done in a similar manner:

StreamWriter sw = new StreamWriter(gzipDataOutputFile, false);

sw.Close();

Console.WriteLine("Cleared data output file " + gzipDataOutputFile + "\n");

The second parameter of the StreamWriter constructor defines whether content is appended to the file. By setting it to false we clear the contents if the file already exists, and create the file if it does not.
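The effect of the append parameter can be seen in isolation with the following minimal sketch, which is not part of the tutorial code. The class and method names are hypothetical. It uses using statements so that the writers are closed even if an exception occurs.

```csharp
using System;
using System.IO;

// Hypothetical helper, not part of the tutorial code.
public static class ClearFileSketch
{
    // append = false: truncates an existing file, or creates an empty one.
    public static void ClearOrCreate(string path)
    {
        using (StreamWriter writer = new StreamWriter(path, false)) { }
    }

    // append = true: keeps the existing content and adds a line at the end.
    public static void AppendLine(string path, string text)
    {
        using (StreamWriter writer = new StreamWriter(path, true))
        {
            writer.WriteLine(text);
        }
    }
}
```

Calling AppendLine twice leaves two lines in the file; a subsequent call to ClearOrCreate leaves it empty.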

Next, we open the error output file and initialise it with a first message:

//Initialise the error output file:

StreamWriter swErr = new StreamWriter(errorOutputFile, true);

swErr.WriteLine("INFO: list of errors found in input file: " + instrumentIdentifiersInputFile + ":");

We also open the input file:

StreamReader sr = new StreamReader(instrumentIdentifiersInputFile);

Creating the instrument list by reading an input file

Instead of creating an array of defined length like in the previous tutorial, we shall create a list for the instrument identifiers.

The reason is that, at this stage, we do not know how many instrument identifiers we will have, because:

- We don't know how many instruments are in the file.

- We shall validate the file entries, and only keep the good ones.

It is not possible to define an array of undefined size, and it would be a waste to define a huge one and then resize it.

So we define a list of undefined size, which we shall fill from the file. Once all instrument identifiers have been added to the list, we convert it to an array, as that is what the On Demand extraction API call requires.

The first step is to create the empty instrument identifiers list:

List<InstrumentIdentifier> instrumentIdentifiersList = new List<InstrumentIdentifier> ();

Then we populate the list, by reading one line at a time, from the input file. First we initialize some variables:

int fileLineNumber = 0;

string fileLine = string.Empty;

string identifierTypeString = string.Empty;

string identifierCodeString = string.Empty;

IdentifierType identifierType;

int i = 0;

Then we read all lines until we get to the end of the file, parse the data, and add the validated data to the list:

bool endOfFile = false;

while (!endOfFile)

{

//Read one line of the file, test if end of file:

fileLine = sr.ReadLine();

endOfFile = (fileLine == null);

if (!endOfFile)

{

fileLineNumber++;

try

{

//Parse the file line to extract the comma separated instrument type and code:

string[] splitLine = fileLine.Split(new char[] { ',' });

identifierTypeString = splitLine[0];

identifierCodeString = splitLine[1];

//Add only validated instrument identifiers into our list.

if (identifierTypeString != string.Empty)

{

if (identifierCodeString != string.Empty)

{

//DSS can handle many types, here we only handle a subset:

switch (identifierTypeString)

{

case "CHR": identifierType = IdentifierType.ChainRIC; break;

case "CIN": identifierType = IdentifierType.Cin; break;

case "COM": identifierType = IdentifierType.CommonCode; break;

case "CSP": identifierType = IdentifierType.Cusip; break;

case "ISN": identifierType = IdentifierType.Isin; break;

case "RIC": identifierType = IdentifierType.Ric; break;

case "SED": identifierType = IdentifierType.Sedol; break;

case "VAL": identifierType = IdentifierType.Valoren; break;

case "WPK": identifierType = IdentifierType.Wertpapier; break;

default:

identifierType = IdentifierType.NONE;

DebugPrintAndWriteToFile("ERROR: line " + fileLineNumber +

": unknown identifier type: " + identifierTypeString, swErr);

break;

}

if (identifierType != IdentifierType.NONE)

{

instrumentIdentifiersList.Add(new InstrumentIdentifier

{

IdentifierType = identifierType,

Identifier = identifierCodeString

});

Console.WriteLine("Line " + fileLineNumber + ": " + identifierTypeString +

" " + identifierCodeString + " loaded into array [" + i + "]");

i++;

}

}

Note: for more information on identifier types, refer to Tutorial 3: Creating an instrument list.

The code that follows is for error logging. Each error is displayed on screen and written to file, using a helper method:

//Error handling messages grouped here:

else

{

DebugPrintAndWriteToFile("ERROR: line " + fileLineNumber +

": missing identifier code in line: " + fileLine, swErr);

}

}

else

{

DebugPrintAndWriteToFile("ERROR: line " + fileLineNumber +

": missing identifier type in line: " + fileLine, swErr);

}

}

catch

{

DebugPrintAndWriteToFile("ERROR: line " + fileLineNumber +

": bad line format: " + fileLine, swErr);

}

}

else

{

if (fileLineNumber == 0)

{ DebugPrintAndWriteToFile("ERROR: empty file: " + instrumentIdentifiersInputFile, swErr); }

}

} //End of while loop
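As a design alternative, the switch statement in the loop above could be replaced by a lookup table. The following is a minimal sketch of that idea, not the tutorial's method. To keep it self-contained it uses a hypothetical local enum, SketchIdentifierType, as a stand-in for the SDK's IdentifierType; the class and method names are also hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the SDK's IdentifierType enum.
public enum SketchIdentifierType
{
    None, ChainRic, Cin, CommonCode, Cusip, Isin, Ric, Sedol, Valoren, Wertpapier
}

// Hypothetical helper, not part of the tutorial code.
public static class IdentifierLookupSketch
{
    // Maps the 3-letter codes used in the input file to identifier types.
    private static readonly Dictionary<string, SketchIdentifierType> Map =
        new Dictionary<string, SketchIdentifierType>
        {
            { "CHR", SketchIdentifierType.ChainRic },
            { "CIN", SketchIdentifierType.Cin },
            { "COM", SketchIdentifierType.CommonCode },
            { "CSP", SketchIdentifierType.Cusip },
            { "ISN", SketchIdentifierType.Isin },
            { "RIC", SketchIdentifierType.Ric },
            { "SED", SketchIdentifierType.Sedol },
            { "VAL", SketchIdentifierType.Valoren },
            { "WPK", SketchIdentifierType.Wertpapier }
        };

    // Returns the matching type, or None for an unknown (or null) code.
    // The lookup is case sensitive, like the switch statement.
    public static SketchIdentifierType Resolve(string code)
    {
        SketchIdentifierType found;
        return (code != null && Map.TryGetValue(code, out found))
            ? found
            : SketchIdentifierType.None;
    }
}
```

Adding support for another identifier type then only requires a new entry in the table.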

The helper method which displays errors and writes them to file is defined at the end of the main code:

static void DebugPrintAndWriteToFile(string messageToPrintAndWriteToFile, StreamWriter sw)

{

Console.WriteLine(messageToPrintAndWriteToFile);

sw.WriteLine(messageToPrintAndWriteToFile);

}

Extract of the output for successfully added instrument identifiers (using the largest sample input file):

Extract of the output for invalid lines in the largest input file:

The error file contains all the ERROR messages that appear on screen, in the same format.

Back to the main code, the next step is to close the input file and the error output file, and exit if we do not have at least one valid entry:

sr.Close();

int validIdentifiersCount = i;

if (validIdentifiersCount == 0)

{

DebugPrintAndWriteToFile("\nFATAL: program exit due to no valid identifiers in the list.", swErr);

DebugPrintAndWaitForEnter("");

swErr.Close();

return; //Exit main program

}

swErr.Close();

Console.WriteLine("\n" + validIdentifiersCount +

" valid instruments were loaded into an array, outside of DSS,\n" +

"for use in the extraction.");

Result:

Now we convert our instrument identifiers list to an array, as that is what the On Demand extraction API call requires:

//Convert the instrument identifiers list to an array:

InstrumentIdentifier[] instrumentIdentifiers = instrumentIdentifiersList.ToArray();
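The same pattern (fill a list of unknown final size, then convert it to an array) can be tried without the SDK. This is a minimal sketch using plain strings instead of InstrumentIdentifier objects; the class and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the tutorial code.
public static class ListToArraySketch
{
    // Keeps only the non-empty entries, then returns them as a fixed-size array.
    public static string[] KeepNonEmpty(IEnumerable<string> candidates)
    {
        // The list grows as needed; we do not need to know the final size upfront.
        List<string> kept = new List<string>();
        foreach (string candidate in candidates)
        {
            if (!string.IsNullOrWhiteSpace(candidate)) { kept.Add(candidate); }
        }
        // ToArray copies the elements into a new array of exactly the right size.
        return kept.ToArray();
    }
}
```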

Creating the field name array

To create the field name array we proceed just like in the previous tutorials, calling the helper method we created in Tutorial 3:

string[] requestedFieldNames = CreateRequestedFieldNames();

We will use this array when we define the extraction.

No report template or schedule creation

We do not create a report template, because the On Demand extraction calls implicitly define it.

We do not create a schedule, because an On Demand extraction is on the fly.

If you require recurring extractions, you can either use the calls we saw in the previous tutorials, or programmatically recurrently run an On Demand extraction.
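One simple way to run an On Demand extraction repeatedly from code is a loop with a pause between runs. The following is a minimal sketch under that assumption, not a recommendation from the tutorial. The runExtraction parameter is a hypothetical placeholder for your own code that builds the request and runs it; the class and method names are hypothetical too. An operating system scheduler that starts the program at fixed times would be another option.

```csharp
using System;
using System.Threading;

// Hypothetical helper, not part of the tutorial code.
public static class RecurringRunSketch
{
    // Calls runExtraction runCount times, pausing between consecutive runs.
    // Returns the number of runs that completed.
    public static int RunRepeatedly(Action runExtraction, int runCount, TimeSpan pause)
    {
        int completed = 0;
        for (int run = 0; run < runCount; run++)
        {
            runExtraction();   // placeholder for the On Demand extraction code
            completed++;
            // No need to wait after the last run.
            if (run < runCount - 1) { Thread.Sleep(pause); }
        }
        return completed;
    }
}
```

Keep the data usage quota in mind when choosing the number of runs and the instrument list.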

Creating an On Demand extraction

First we define the On Demand request conditions.

In this example we want Intraday Summaries (bar) data, so we declare a new TickHistoryIntradaySummariesCondition. It defines the bar interval (in this case one hour), the historical date and time range for the data we want, and a few other parameters:

TickHistoryIntradaySummariesCondition condition = new TickHistoryIntradaySummariesCondition

{

SummaryInterval = TickHistorySummaryInterval.OneHour,

DaysAgo = null,

MessageTimeStampIn = TickHistoryTimeOptions.GmtUtc,

QueryStartDate = new DateTimeOffset(2016, 09, 29, 0, 0, 0, TimeSpan.FromHours(0)),

QueryEndDate = new DateTimeOffset(2016, 09, 30, 0, 0, 0, TimeSpan.FromHours(0)),

ReportDateRangeType = ReportDateRangeType.Range,

ExtractBy = TickHistoryExtractByMode.Ric,

SortBy = TickHistorySort.SingleByRic,

Preview = PreviewMode.None,

DisplaySourceRIC = true,

TimebarPersistence = true

};

If we were requesting a different type of data (like Time and Sales, market depth, or other), a few of the available parameters to define in the condition could be slightly different. Go to the API Reference Tree and select the appropriate extraction request value to see more details.
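When adapting the query dates, it can help to check how long the requested range is before sending the request. This minimal sketch, which is not part of the tutorial code, computes the number of hours between two DateTimeOffset values. The class and method names are hypothetical, and the result says nothing about how many bars the server will actually return.

```csharp
using System;

// Hypothetical helper, not part of the tutorial code.
public static class QueryRangeSketch
{
    // Returns the length of the query range in hours.
    // DateTimeOffset values are compared as points in time, whatever their offsets.
    public static double HoursCovered(DateTimeOffset queryStart, DateTimeOffset queryEnd)
    {
        if (queryEnd <= queryStart)
        {
            throw new ArgumentException("The end must be later than the start.");
        }
        return (queryEnd - queryStart).TotalHours;
    }
}
```

For the dates used in the condition above (29 to 30 September 2016, both at midnight UTC) the range covers 24 hours.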

Important note: inactive and historical instruments might be rejected, like a matured bond or an instrument that changed name. As explained in the Tutorials Introduction under heading Instrument Validation, contrary to scheduled requests, On Demand requests do not take user preferences set in the GUI into account. So, when required, we must specify the instrument validation behavior in the code. There are many available options. In this example we only set 3 of them, and override any other user preferences:

InstrumentValidationOptions validationOptions = new InstrumentValidationOptions

{

AllowHistoricalInstruments = true,

AllowInactiveInstruments = true,

AllowOpenAccessInstruments = true

};

bool useUserPreferencesForValidationOptions = false;

The 2 parameters defined above are optional; if not set, simply remove them from the next call.

Next we define a new TickHistoryIntradaySummariesExtractionRequest, using as parameters the instrument identifier array, the field name array and the condition we just defined:

TickHistoryIntradaySummariesExtractionRequest extractionRequest =

new TickHistoryIntradaySummariesExtractionRequest

{

IdentifierList = InstrumentIdentifierList.Create(instrumentIdentifiers, validationOptions,

useUserPreferencesForValidationOptions),

ContentFieldNames = requestedFieldNames,

Condition = condition

};

The extraction request is specific to the type of data we want, just like the conditions.

For other types of data, the extraction request type would be different, the conditions might be slightly different, but the overall process is exactly the same. The Example Application (explained in the Quick Start) illustrates many more On Demand extractions.

Running an On Demand extraction

We first define an option for the request, to disable automatic decompression. This is because we want to download the compressed data and, if required, decompress it using a dedicated package. We used SharpZipLib; other libraries to consider include zlib and DotNetZip. The result is more reliable and robust code that can handle large data sets:

//Do not automatically decompress data file content:

extractionsContext.Options.AutomaticDecompression = false;

To actually run the extraction we use the ExtractRaw call. It takes the extraction request we created as its parameter. The call only returns when the extraction is complete, so there is no need for code to check for extraction completion.

RawExtractionResult extractionResult = extractionsContext.ExtractRaw(extractionRequest);

Retrieving the extracted data, writing it to file

Once the On Demand extraction has completed, the data and notes can be retrieved.

Let us start with the data. As data sets can be very large, the API implements a streaming mechanism to retrieve the data. Using streams means your program will not use huge amounts of memory, and data can be processed inline.

That said, on the fly processing is not recommended for large data sets. Instead, it is recommended to save the compressed data to file, and then to read, decompress and treat it from there.

The code sample illustrates several possibilities:

- Saving the data directly as a compressed file, on hard disk.

- Saving the data as a CSV file, for later usage (out of scope here).

- Reading and decompressing the data from the compressed file that was saved to hard disk.

- Reading and decompressing the data on the fly, as it is received from the server. This variant also saves the data as a second CSV file (identical to the one created by the other variant above).

It also shows how to download the data from AWS (the Amazon Web Services cloud), which is faster than the standard download.

For more on decompression and on AWS downloads, see the 2 advisories available in the documentation under the Alerts and Notices section.

To save the data in compressed format, the code is very simple; we just copy the result stream into a file.

If we want to download from AWS, we first set an additional request header, which we remove afterwards:

//Direct download from AWS ?

if (awsDownload) { extractionsContext.DefaultRequestHeaders.Add("x-direct-download", "true"); };

DssStreamResponse streamResponse = extractionsContext.GetReadStream(extractionResult);

using (FileStream fileStream = File.Create(gzipDataOutputFile))

streamResponse.Stream.CopyTo(fileStream);

//Reset header after direct download from AWS ?

if (awsDownload) { extractionsContext.DefaultRequestHeaders.Remove("x-direct-download"); };

Console.WriteLine("Saved the compressed data file to disk:\n" + gzipDataOutputFile);
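In the code above, the header is not removed if the download throws an exception. One way to guarantee the reset is a try/finally block, sketched below. This is not part of the tutorial code: the class and method names are hypothetical, and the sketch assumes the header collection can be handled as an IDictionary<string, string>, which should be checked against the SDK.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the tutorial code.
public static class HeaderScopeSketch
{
    // Adds a header, runs the download, and always removes the header afterwards,
    // even when the download throws an exception.
    // Assumption: the header collection behaves like an IDictionary<string, string>.
    public static void WithHeader(IDictionary<string, string> headers,
        string name, string value, Action download)
    {
        headers.Add(name, value);
        try
        {
            download();   // placeholder for GetReadStream and the copy to file
        }
        finally
        {
            headers.Remove(name);
        }
    }
}
```

The same helper could wrap each of the download variants that follow, so the add and remove lines are not repeated.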

Alternatively we can decompress the data on the fly, and save the result in a CSV file, for later usage:

//Direct download from AWS ?

if (awsDownload) { extractionsContext.DefaultRequestHeaders.Add("x-direct-download", "true"); };

streamResponse = extractionsContext.GetReadStream(extractionResult);

using (GZipInputStream gzip = new GZipInputStream(streamResponse.Stream))

using (FileStream fileStream = File.Create(csvDataOutputFile))

gzip.CopyTo(fileStream);

//Reset header after direct download from AWS ?

if (awsDownload) { extractionsContext.DefaultRequestHeaders.Remove("x-direct-download"); };

Console.WriteLine("Saved the uncompressed data file to disk:\n" + csvDataOutputFile);

After saving the data to hard disk in compressed format, we can read and decompress it for further treatment. This is the recommended way to proceed, especially for large data sets. Here we decompress the data, and display it line by line on screen. The first line is the header line, which is the list of fields. The remaining lines contain the data.

int lineCount = 0;

string line = "";

Console.WriteLine("Read compressed data file\n" + gzipDataOutputFile + "\nfrom disk, and decompress contents:");

Console.WriteLine("==================================== DATA =====================================");

swErr = new StreamWriter(errorOutputFile, true);

using (FileStream fileStream = File.OpenRead(gzipDataOutputFile))

{

using (GZipInputStream gzip = new GZipInputStream(fileStream))

{

using (StreamReader reader = new StreamReader(gzip, Encoding.UTF8))

{

line = reader.ReadLine();

if (string.IsNullOrEmpty(line))

{

Console.WriteLine("WARNING: no data returned. Check your request dates.");

swErr.WriteLine("WARNING: no data returned. Check your request dates.");

}

else

{

//The first line is the list of field names:

Console.WriteLine(line);

lineCount++;

}

//The remaining lines are the data:

//Variant 1 (no limit on screen output): write all lines to console

//while ((line = reader.ReadLine()) != null)

//{

// Console.WriteLine(line);

// lineCount++;

//}

//Variant 2: write lines individually to console (with a limit on number of lines we output)

for (lineCount = 1; !reader.EndOfStream; lineCount++)

{

line = reader.ReadLine();

if (lineCount < maxDataLines) { Console.WriteLine(line); };

if (lineCount == maxDataLines)

{

Console.WriteLine("===============================================================================");

Console.WriteLine("Stop screen output now, we reached the max number of lines we set for display:\n" + maxDataLines);

Console.WriteLine("Counting the remaining lines, please be patient ...");

}

}

Console.WriteLine("Total line count for data read from disk: " + lineCount);

}

}

}

swErr.Close();

Inside the loop, the commented code variant 1 is shorter, but the second one is more appropriate for a demo.
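The read, decompress and count pattern can be tried without a DSS account using the following minimal sketch, which is not part of the tutorial code. It uses the .NET built-in GZipStream as a stand-in for SharpZipLib's GZipInputStream, and a small helper that writes a gzipped file to stand in for the saved extraction result. All class and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;
using System.Text;

// Hypothetical helper, not part of the tutorial code.
public static class ReadCompressedFileSketch
{
    // Writes the lines to a gzipped file (stands in for the saved extraction result).
    public static void WriteGzipLines(string path, IEnumerable<string> lines)
    {
        using (FileStream fileStream = File.Create(path))
        using (GZipStream gzip = new GZipStream(fileStream, CompressionMode.Compress))
        using (StreamWriter writer = new StreamWriter(gzip, new UTF8Encoding(false)))
        {
            foreach (string line in lines) { writer.WriteLine(line); }
        }
    }

    // Reads and decompresses the file line by line.
    // Only the first maxLines lines are passed to display; all lines are counted.
    public static int ReadGzipLines(string path, int maxLines, Action<string> display)
    {
        int lineCount = 0;
        using (FileStream fileStream = File.OpenRead(path))
        using (GZipStream gzip = new GZipStream(fileStream, CompressionMode.Decompress))
        using (StreamReader reader = new StreamReader(gzip, Encoding.UTF8))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
            {
                if (lineCount < maxLines) { display(line); }
                lineCount++;
            }
        }
        return lineCount;
    }
}
```

Passing Console.WriteLine as the display parameter reproduces the on-screen output with a line limit, while the returned value is the total line count, header included.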

The last variant is to decompress the data on the fly when receiving it from the server, without the intermediary step of saving it to disk. We immediately treat it, line by line. This method is not recommended for large data sets.

In this code we just display it on screen, and also save it to a CSV file:

lineCount = 0;

Console.WriteLine("Read data from DSS, decompress contents on the fly, save to csv and display:");

Console.WriteLine("==================================== DATA =====================================");

//Direct download from AWS ?

if (awsDownload) { extractionsContext.DefaultRequestHeaders.Add("x-direct-download", "true"); };

swErr = new StreamWriter(errorOutputFile, true);

sw = new StreamWriter(csvDataOutputFile2, false);

streamResponse = extractionsContext.GetReadStream(extractionResult);

{

using (GZipInputStream gzip = new GZipInputStream(streamResponse.Stream))

{

using (StreamReader reader = new StreamReader(gzip, Encoding.UTF8))

{

line = reader.ReadLine();

if (string.IsNullOrEmpty(line))

{

Console.WriteLine("WARNING: no data returned. Check your request dates.");

swErr.WriteLine("WARNING: no data returned. Check your request dates.");

}

else

{

//The first line is the list of field names:

sw.WriteLine(line);

Console.WriteLine(line);

lineCount++;

//The remaining lines are the data:

//Variant 1 (no limit on screen output): write all lines to console

//while ((line = reader.ReadLine()) != null)

//{

// Console.WriteLine(line);

// lineCount++;

//}

//Variant 2: write lines individually to console (with a limit on number of lines we output)

for (lineCount = 1; !reader.EndOfStream; lineCount++)

{

line = reader.ReadLine();

sw.WriteLine(line);

if (lineCount < maxDataLines) { Console.WriteLine(line); };

if (lineCount == maxDataLines)

{

Console.WriteLine("===============================================================================");

Console.WriteLine("Stop screen output now, we reached the max number of lines we set for display:\n" + maxDataLines);

Console.WriteLine("Counting the remaining lines, please be patient ...");

}

}

Console.WriteLine("Total line count for data read on the fly: " + lineCount);

}

}

}

}

//Reset header after direct download from AWS ?

if (awsDownload) { extractionsContext.DefaultRequestHeaders.Remove("x-direct-download"); };

sw.Close();

swErr.Close();

Console.WriteLine("\nSaved the uncompressed data file to disk:\n" + csvDataOutputFile2);

Here is a small extract of the first lines of the output, with the list of fields and the beginning of the data:

The resulting data might not contain all instruments, even though they were not rejected when we added them to the instrument list. This simply depends on the availability of data for the requested period.

Retrieving and analyzing the extraction notes, writing them to file

Note: AWS download only applies to data files, which explains why the following code makes no provision for it.

It is recommended to examine the extraction notes. They deliver useful information on the extraction, and might contain errors or warnings.

The notes are contained in a collection of strings that can easily be retrieved from the extraction result. The collection usually contains only one element, in other words all the notes are in a single string that contains new line characters. To analyze the notes contents line by line, the string must be split. This is illustrated in the sample code, which parses the notes file for the successful processing message, as well as errors, warnings, and permission issues:

sw = new StreamWriter(noteOutputFile, true);

Console.WriteLine("==================================== NOTES ====================================");

Boolean success = false;

string errorMsgs = "";

string warningMsgs = "";

string permissionMsgs = "";

foreach (String notes in extractionResult.Notes)

{

sw.WriteLine(notes);

Console.WriteLine(notes);

//The returned notes are in a single string. To analyse the contents line by line we split it:

string[] notesLines =

notes.Split(new string[] { "\n", "\r\n" }, StringSplitOptions.RemoveEmptyEntries);

foreach (string notesLine in notesLines)

{

success = success || (notesLine.Contains("Processing completed successfully"));

if (notesLine.Contains("ERROR")) { errorMsgs = errorMsgs + notesLine + "\n"; }

if (notesLine.Contains("WARNING")) { warningMsgs = warningMsgs + notesLine + "\n"; }

if (notesLine.Contains("row suppressed for lack of"))

{

permissionMsgs = permissionMsgs + notesLine + "\n";

}

}

}

sw.Close();

The results of the analysis are also written to the error file and displayed on screen:

swErr = new StreamWriter(errorOutputFile, true);

if (success) { Console.WriteLine("SUCCESS: processing completed successfully.\n"); }

else { errorMsgs = "ERROR: processing did not complete successfully !\n" + errorMsgs; }

if (errorMsgs != "")

{

swErr.WriteLine("\nERROR messages in notes file:\n" + errorMsgs);

Console.WriteLine("ERROR messages:\n" + errorMsgs);

}

if (warningMsgs != "")

{

swErr.WriteLine("WARNING messages in notes file:\n" + warningMsgs);

Console.WriteLine("WARNING messages:\n" + warningMsgs);

}

if (permissionMsgs != "")

{

swErr.WriteLine("PERMISSION ISSUES messages in notes file:\n" + permissionMsgs);

Console.WriteLine("PERMISSION ISSUES messages:\n" + permissionMsgs);

}

swErr.Close();

This analysis is just an example of what one could do to extract some of the meaningful messages from the notes.
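The line by line search can also be isolated in a small reusable function. The following is a minimal sketch, not part of the tutorial code; the class and method names are hypothetical. Like the code above, it splits the notes on both Windows and Unix line endings and does a case sensitive search.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the tutorial code.
public static class NotesAnalysisSketch
{
    // Returns all lines of the notes text that contain the marker (case sensitive).
    public static List<string> LinesContaining(string notes, string marker)
    {
        List<string> matches = new List<string>();
        if (string.IsNullOrEmpty(notes)) { return matches; }

        // The notes arrive as one string; split it into lines, dropping empty ones.
        string[] lines = notes.Split(
            new string[] { "\r\n", "\n" }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string line in lines)
        {
            if (line.Contains(marker)) { matches.Add(line); }
        }
        return matches;
    }
}
```

For example, LinesContaining(notes, "ERROR") returns the error lines, and a non-empty result for "Processing completed successfully" indicates success.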

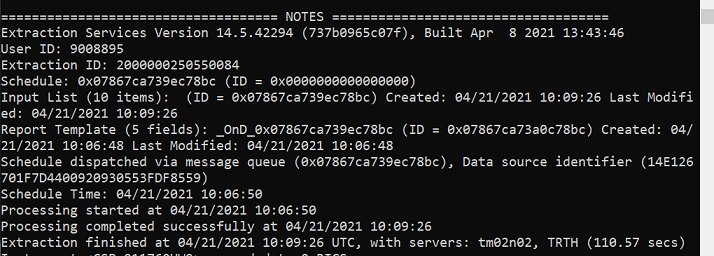
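The same classification can also be packaged as a small helper that does not depend on the DSS SDK, which makes it easy to try out against a saved notes file. The following is only a minimal sketch, assuming the notes are available as a single string; the names `NotesSummary` and `NotesAnalyzer` are hypothetical and are not part of the SDK or of the tutorial code:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical container for the analysis results (not an SDK type).
public sealed class NotesSummary
{
    public bool Success { get; set; }
    public List<string> Errors { get; } = new List<string>();
    public List<string> Warnings { get; } = new List<string>();
    public List<string> PermissionIssues { get; } = new List<string>();
}

public static class NotesAnalyzer
{
    // Splits the notes into lines and sorts them into the same
    // categories as the tutorial code: success, errors, warnings
    // and permission issues.
    public static NotesSummary Analyze(string notes)
    {
        var summary = new NotesSummary();
        string[] lines = notes.Split(
            new[] { "\r\n", "\n" }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string line in lines)
        {
            if (line.Contains("Processing completed successfully")) { summary.Success = true; }
            if (line.Contains("ERROR")) { summary.Errors.Add(line); }
            if (line.Contains("WARNING")) { summary.Warnings.Add(line); }
            if (line.Contains("row suppressed for lack of")) { summary.PermissionIssues.Add(line); }
        }
        return summary;
    }
}
```

Keeping the messages in lists rather than concatenated strings leaves the choice of output format (console, file, or both) to the caller.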

Here is the first part of the output, with generic information about the extraction:

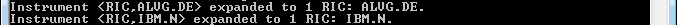

Note that the notes above state that the input list has 199 items. That count is taken before instrument expansion. The next part of the notes details the expansions. Simple instruments expand to 1 RIC (i.e. they do not expand):

Instruments that are not valid for the requested range of dates will expand to 0 RICs (i.e. they are removed from the list):

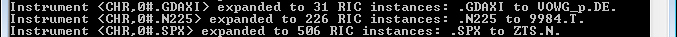

Chains will expand to multiple RICs:

Several instrument types, like ISINs, CUSIPs and SEDOLs, will also expand to multiple RICs:

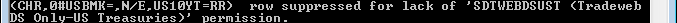
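Expansion lines can be picked out of the notes to find the instruments that were dropped from the request. The sketch below assumes the line format seen in the sample run of this tutorial (for example `Instrument <CSP,911760HW9> expanded to 0 RICS.`); the class and method names are hypothetical, and the format itself could differ between server versions:

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class ExpansionNotes
{
    // Matches lines such as:
    //   Instrument <CSP,911760HW9> expanded to 0 RICS.
    //   Instrument <RIC,ALVG.DE> expanded to 1 RIC: ALVG.DE.
    // The format is assumed from the sample run, not from a specification.
    private static readonly Regex ExpansionLine = new Regex(
        @"^Instrument <(?<type>[^,>]+),(?<code>[^>]+)> expanded to (?<count>\d+) RICS?\b",
        RegexOptions.Compiled);

    // Returns the identifiers ("TYPE,CODE") that expanded to 0 RICs.
    public static List<string> FindUnexpanded(IEnumerable<string> notesLines)
    {
        var dropped = new List<string>();
        foreach (string line in notesLines)
        {
            Match m = ExpansionLine.Match(line);
            if (m.Success && int.Parse(m.Groups["count"].Value) == 0)
            {
                dropped.Add(m.Groups["type"].Value + "," + m.Groups["code"].Value);
            }
        }
        return dropped;
    }
}
```

The returned list could, for instance, be appended to the error file so that instruments that delivered no data are easy to trace back to the input file.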

Data access is subject to permissioning. Depending on the entitlements of the account, some data might not be delivered. Applied permission restrictions are detailed per impacted instrument:

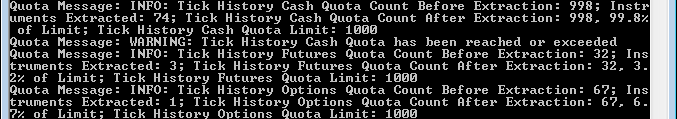

The amount of data that can be retrieved in a month is subject to a quota, which is also part of the account permissioning. Quota related messages are also delivered in the notes:

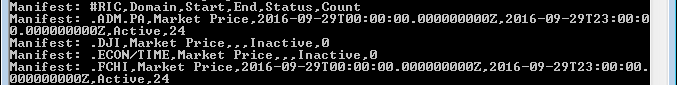
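Quota usage can be monitored by reading the percentage out of the quota lines. This is a rough sketch that assumes the wording seen in the sample run of this tutorial (a line starting with `Quota Message:` and containing a value such as `638% of Limit`); the helper name is hypothetical and the message wording is not guaranteed to stay stable:

```csharp
using System;
using System.Globalization;
using System.Text.RegularExpressions;

public static class QuotaNotes
{
    // Wording assumed from the sample run, e.g. "... 3190, 638% of Limit; ..."
    private static readonly Regex PercentOfLimit = new Regex(@"(?<pct>\d+)% of Limit");

    // Returns the quota usage percentage found in a "Quota Message:" line,
    // or null if the line is not a quota message or carries no percentage.
    public static int? TryGetUsagePercent(string notesLine)
    {
        if (!notesLine.StartsWith("Quota Message:", StringComparison.Ordinal)) { return null; }
        Match m = PercentOfLimit.Match(notesLine);
        if (!m.Success) { return null; }
        return int.Parse(m.Groups["pct"].Value, CultureInfo.InvariantCulture);
    }
}
```

A caller could compare the returned value with a threshold of its own choosing and log a warning before the limit is reached.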

The last section of the notes gives a summary list of all the instruments.

This includes the status of each one, i.e. whether it was active during the time range of the request.

The last element of each line is the count of delivered data lines for that instrument. The count is 0 for inactive instruments:

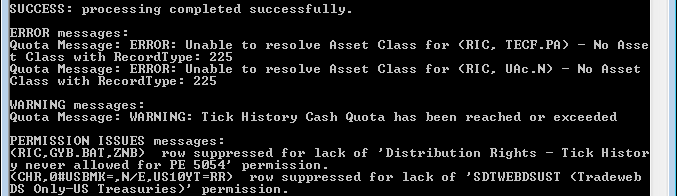
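The manifest lines lend themselves to a simple consistency check on the delivered data. The sketch below assumes the layout seen in the sample run of this tutorial (`Manifest: #RIC,Domain,Start,End,Status,Count`); the class name is hypothetical, and any status other than `Active` is simply treated as not active, since the exact wording of the other statuses is not shown here:

```csharp
using System;
using System.Collections.Generic;

public static class ManifestNotes
{
    private const string Prefix = "Manifest: ";

    // Sums the delivered line counts and collects the RICs whose status
    // is anything other than "Active". Column positions are assumed from
    // the header "#RIC,Domain,Start,End,Status,Count".
    public static int Summarize(IEnumerable<string> notesLines, List<string> notActive)
    {
        int total = 0;
        foreach (string line in notesLines)
        {
            if (!line.StartsWith(Prefix, StringComparison.Ordinal)) { continue; }
            string body = line.Substring(Prefix.Length);
            if (body.StartsWith("#", StringComparison.Ordinal)) { continue; } // header row
            string[] fields = body.Split(',');
            if (fields.Length < 6) { continue; } // unexpected layout, skip the line
            if (fields[4] != "Active") { notActive.Add(fields[0]); }
            int count;
            if (int.TryParse(fields[5], out count)) { total += count; }
        }
        return total;
    }
}
```

For the sample run in this tutorial, the three manifest lines add up to 72, which matches the 73 lines reported for the data file once its header row is subtracted.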

Our sample code analyzes all the notes, and delivers a summary. Here is the first part of that:

No cleaning up

As stated previously, cleanup on the DSS server is not required, as there is nothing to delete in this case.

Full code

The full code can be displayed by opening the appropriate solution file in Microsoft Visual Studio.

Summary

List of the main steps in the code:

- Authenticate by creating an extraction context.

- Check input file and output directory existence, clear output files.

- Create an array of financial instrument identifiers, populate it from a file. Manage and log errors.

- Create an array of field names.

- Create an extraction, after setting instrument validation options (if required, for instance for historical instruments).

- Run the extraction, wait for it to complete.

- Retrieve the extracted data, display it and write it to file.

- Retrieve the extraction notes, display them, analyze them and write them to file.

We do not create a report template or schedule, and there is no need to clean up.

Code run results

Build and run

Don’t forget to reference the DSS SDK and SharpZipLib, and to set your user account in Program.cs!

Successful run

After running the program with the smallest instrument list, and pressing the Enter key when prompted, here is the result:

Returned session token: _8ifND7n92k2ciXE9ASUf1ZKHXGsbevxPd82grFzkR9tm4tlTd-gWOQIcBdCgCUDk9kQoXC1SQoDjoS-McUQCfGIyNL9ri54AGPo7Pvi_R7tdKGlYrwBRlaIDk9hvCdgnV5jFes6pkwnO84Evip8OMBiNlgfRavkxLLoxfLVvaxt9uhTNl48P6kcyNaX8mKYlX02DBaDvSavMnOiT2Lp7GHf0mvNbG9F0PTFoycgFwBNDrui4BO6IZWoCQrV

Press Enter to continue

Cleared compressed data output file C:\API TH REST\TH_API_tutorial_5l_data.csv.gz

Cleared CSV data output file C:\API TH REST\TH_API_tutorial_5l_data.csv

Cleared second CSV data output file C:\API TH REST\TH_API_tutorial_5l_data2.csv

Cleared note output file C:\API TH REST\TH_API_tutorial_5l_note.txt

Cleared error output file C:\API TH REST\TH_API_tutorial_5l_errors.txt

ERROR: line 1: unknown identifier type: Identifier Type

Line 2: CSP 911760HW9 loaded into array [0]

Line 3: CSP 79548KHM3 loaded into array [1]

Line 4: CSP 194196BL4 loaded into array [2]

Line 5: CSP 911760JQ0 loaded into array [3]

Line 6: CSP 31282YAC3 loaded into array [4]

Line 7: CSP 31352DCR9 loaded into array [5]

Line 8: CSP 31352JRV1 loaded into array [6]

ERROR: line 9: bad line format: #RICs:

Line 10: RIC ALVG.DE loaded into array [7]

Line 11: RIC IBM.N loaded into array [8]

Line 12: RIC 0001.HK loaded into array [9]

ERROR: line 13: bad line format:

ERROR: line 14: bad line format: #Lines for error handling tests:

ERROR: line 15: unknown identifier type: Junk

ERROR: line 16: unknown identifier type: Junk

ERROR: line 17: missing identifier type in line: ,Junk

ERROR: line 18: missing identifier code in line: Junk,

ERROR: line 19: bad line format: Junk

ERROR: line 20: missing identifier type in line: ,

ERROR: line 21: missing identifier code in line: CSP,,

ERROR: line 22: missing identifier code in line: CSP,

ERROR: line 23: missing identifier type in line: ,CSP

ERROR: line 24: bad line format: CSP

ERROR: line 25: unknown identifier type: Junk

ERROR: line 26: unknown identifier type: Junk

ERROR: line 27: missing identifier code in line: RIC,,

ERROR: line 28: missing identifier code in line: RIC,

ERROR: line 29: missing identifier type in line: ,RIC

ERROR: line 30: bad line format: RIC

ERROR: line 31: unknown identifier type: Junk

ERROR: line 32: unknown identifier type: Junk

10 valid instruments were loaded into an array, outside of DSS,

for use in the extraction.

We also created an array of field names, outside of DSS.

Next we will launch a direct on demand intraday summary extraction,

using these 2 arrays.

Press Enter to continue

5:06:47 PM Please be patient and wait for the extraction to complete ...

5:09:36 PM Extraction complete ...

Press Enter to continue

Saved the compressed data file to disk:

C:\API TH REST\TH_API_tutorial_5l_data.csv.gz

Press Enter to continue

Saved the uncompressed data file to disk:

C:\API TH REST\TH_API_tutorial_5l_data.csv

Press Enter to continue

Read compressed data file

C:\API TH REST\TH_API_tutorial_5l_data.csv.gz

from disk, and decompress contents:

==================================== DATA =====================================

#RIC,Alias Underlying RIC,Domain,Date-Time,GMT Offset,Type,Open,High,Low,Last,Volume

0001.HK,,Market Price,2016-09-29T00:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T01:00:00.000000000Z,+8,Intraday 1Hour,99.4,100.2,99.4,99.75,444500

0001.HK,,Market Price,2016-09-29T02:00:00.000000000Z,+8,Intraday 1Hour,99.7,99.9,99.4,99.45,449500

0001.HK,,Market Price,2016-09-29T03:00:00.000000000Z,+8,Intraday 1Hour,99.45,99.65,99.35,99.6,371000

0001.HK,,Market Price,2016-09-29T04:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T05:00:00.000000000Z,+8,Intraday 1Hour,99.5,99.6,99.25,99.25,280500

0001.HK,,Market Price,2016-09-29T06:00:00.000000000Z,+8,Intraday 1Hour,99.3,99.5,99.3,99.4,204000

0001.HK,,Market Price,2016-09-29T07:00:00.000000000Z,+8,Intraday 1Hour,99.4,99.85,99.4,99.85,1448500

0001.HK,,Market Price,2016-09-29T08:00:00.000000000Z,+8,Intraday 1Hour,99.7,99.7,99.7,99.7,506500

0001.HK,,Market Price,2016-09-29T09:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T10:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T11:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T12:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T13:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T14:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T15:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T16:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T17:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T18:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T19:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T20:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T21:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T22:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T23:00:00.000000000Z,+8,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T00:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T01:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T02:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T03:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T04:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T05:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T06:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T07:00:00.000000000Z,+2,Intraday 1Hour,132.9,133.5,132.8,133.25,186918

ALVG.DE,,Market Price,2016-09-29T08:00:00.000000000Z,+2,Intraday 1Hour,133.3,133.7,132.7,132.85,104396

ALVG.DE,,Market Price,2016-09-29T09:00:00.000000000Z,+2,Intraday 1Hour,132.85,133.15,132.7,132.85,123768

ALVG.DE,,Market Price,2016-09-29T10:00:00.000000000Z,+2,Intraday 1Hour,132.85,132.9,132.7,132.75,76545

ALVG.DE,,Market Price,2016-09-29T11:00:00.000000000Z,+2,Intraday 1Hour,132.8,133.3,132.75,133.15,49522

ALVG.DE,,Market Price,2016-09-29T12:00:00.000000000Z,+2,Intraday 1Hour,133.15,133.2,132.5,132.7,116571

ALVG.DE,,Market Price,2016-09-29T13:00:00.000000000Z,+2,Intraday 1Hour,132.75,132.9,132.45,132.55,75991

ALVG.DE,,Market Price,2016-09-29T14:00:00.000000000Z,+2,Intraday 1Hour,132.55,132.85,131.35,131.45,156691

ALVG.DE,,Market Price,2016-09-29T15:00:00.000000000Z,+2,Intraday 1Hour,131.4,131.45,130.6,131.3,421795

ALVG.DE,,Market Price,2016-09-29T16:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T17:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T18:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T19:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T20:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T21:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T22:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T23:00:00.000000000Z,+2,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T00:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T01:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T02:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T03:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T04:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T05:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T06:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T07:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T08:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T09:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T10:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T11:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T12:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T13:00:00.000000000Z,-4,Intraday 1Hour,158.55,160.52,158.55,159.53,194717

IBM.N,,Market Price,2016-09-29T14:00:00.000000000Z,-4,Intraday 1Hour,159.57,159.91,159.03,159.14,93953

IBM.N,,Market Price,2016-09-29T15:00:00.000000000Z,-4,Intraday 1Hour,159.14,159.8,158.6,159.28,107389

IBM.N,,Market Price,2016-09-29T16:00:00.000000000Z,-4,Intraday 1Hour,159.23,159.67,157.93,158.16,75752

IBM.N,,Market Price,2016-09-29T17:00:00.000000000Z,-4,Intraday 1Hour,158.24,158.37,157.49,157.68,70565

IBM.N,,Market Price,2016-09-29T18:00:00.000000000Z,-4,Intraday 1Hour,157.71,158.72,157.7,158.36,44732

IBM.N,,Market Price,2016-09-29T19:00:00.000000000Z,-4,Intraday 1Hour,158.33,158.61,158.04,158.14,114186

IBM.N,,Market Price,2016-09-29T20:00:00.000000000Z,-4,Intraday 1Hour,158.11,158.11,158.11,158.11,370188

IBM.N,,Market Price,2016-09-29T21:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T22:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T23:00:00.000000000Z,-4,Intraday 1Hour,,,,,

Total line count for data read from disk: 73

===============================================================================

Press Enter to continue

Read data from DSS, decompress contents on the fly, save to csv and display:

==================================== DATA =====================================

#RIC,Alias Underlying RIC,Domain,Date-Time,GMT Offset,Type,Open,High,Low,Last,Volume

0001.HK,,Market Price,2016-09-29T00:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T01:00:00.000000000Z,+8,Intraday 1Hour,99.4,100.2,99.4,99.75,444500

0001.HK,,Market Price,2016-09-29T02:00:00.000000000Z,+8,Intraday 1Hour,99.7,99.9,99.4,99.45,449500

0001.HK,,Market Price,2016-09-29T03:00:00.000000000Z,+8,Intraday 1Hour,99.45,99.65,99.35,99.6,371000

0001.HK,,Market Price,2016-09-29T04:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T05:00:00.000000000Z,+8,Intraday 1Hour,99.5,99.6,99.25,99.25,280500

0001.HK,,Market Price,2016-09-29T06:00:00.000000000Z,+8,Intraday 1Hour,99.3,99.5,99.3,99.4,204000

0001.HK,,Market Price,2016-09-29T07:00:00.000000000Z,+8,Intraday 1Hour,99.4,99.85,99.4,99.85,1448500

0001.HK,,Market Price,2016-09-29T08:00:00.000000000Z,+8,Intraday 1Hour,99.7,99.7,99.7,99.7,506500

0001.HK,,Market Price,2016-09-29T09:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T10:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T11:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T12:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T13:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T14:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T15:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T16:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T17:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T18:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T19:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T20:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T21:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T22:00:00.000000000Z,+8,Intraday 1Hour,,,,,

0001.HK,,Market Price,2016-09-29T23:00:00.000000000Z,+8,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T00:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T01:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T02:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T03:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T04:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T05:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T06:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T07:00:00.000000000Z,+2,Intraday 1Hour,132.9,133.5,132.8,133.25,186918

ALVG.DE,,Market Price,2016-09-29T08:00:00.000000000Z,+2,Intraday 1Hour,133.3,133.7,132.7,132.85,104396

ALVG.DE,,Market Price,2016-09-29T09:00:00.000000000Z,+2,Intraday 1Hour,132.85,133.15,132.7,132.85,123768

ALVG.DE,,Market Price,2016-09-29T10:00:00.000000000Z,+2,Intraday 1Hour,132.85,132.9,132.7,132.75,76545

ALVG.DE,,Market Price,2016-09-29T11:00:00.000000000Z,+2,Intraday 1Hour,132.8,133.3,132.75,133.15,49522

ALVG.DE,,Market Price,2016-09-29T12:00:00.000000000Z,+2,Intraday 1Hour,133.15,133.2,132.5,132.7,116571

ALVG.DE,,Market Price,2016-09-29T13:00:00.000000000Z,+2,Intraday 1Hour,132.75,132.9,132.45,132.55,75991

ALVG.DE,,Market Price,2016-09-29T14:00:00.000000000Z,+2,Intraday 1Hour,132.55,132.85,131.35,131.45,156691

ALVG.DE,,Market Price,2016-09-29T15:00:00.000000000Z,+2,Intraday 1Hour,131.4,131.45,130.6,131.3,421795

ALVG.DE,,Market Price,2016-09-29T16:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T17:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T18:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T19:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T20:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T21:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T22:00:00.000000000Z,+2,Intraday 1Hour,,,,,

ALVG.DE,,Market Price,2016-09-29T23:00:00.000000000Z,+2,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T00:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T01:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T02:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T03:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T04:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T05:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T06:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T07:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T08:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T09:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T10:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T11:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T12:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T13:00:00.000000000Z,-4,Intraday 1Hour,158.55,160.52,158.55,159.53,194717

IBM.N,,Market Price,2016-09-29T14:00:00.000000000Z,-4,Intraday 1Hour,159.57,159.91,159.03,159.14,93953

IBM.N,,Market Price,2016-09-29T15:00:00.000000000Z,-4,Intraday 1Hour,159.14,159.8,158.6,159.28,107389

IBM.N,,Market Price,2016-09-29T16:00:00.000000000Z,-4,Intraday 1Hour,159.23,159.67,157.93,158.16,75752

IBM.N,,Market Price,2016-09-29T17:00:00.000000000Z,-4,Intraday 1Hour,158.24,158.37,157.49,157.68,70565

IBM.N,,Market Price,2016-09-29T18:00:00.000000000Z,-4,Intraday 1Hour,157.71,158.72,157.7,158.36,44732

IBM.N,,Market Price,2016-09-29T19:00:00.000000000Z,-4,Intraday 1Hour,158.33,158.61,158.04,158.14,114186

IBM.N,,Market Price,2016-09-29T20:00:00.000000000Z,-4,Intraday 1Hour,158.11,158.11,158.11,158.11,370188

IBM.N,,Market Price,2016-09-29T21:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T22:00:00.000000000Z,-4,Intraday 1Hour,,,,,

IBM.N,,Market Price,2016-09-29T23:00:00.000000000Z,-4,Intraday 1Hour,,,,,

Total line count for data read on the fly: 73

Saved the uncompressed data file to disk:

C:\API TH REST\TH_API_tutorial_5l_data2.csv

===============================================================================

Press Enter to continue

==================================== NOTES ====================================

Extraction Services Version 14.5.42294 (737b0965c07f), Built Apr 8 2021 13:43:46

User ID: 9008895

Extraction ID: 2000000250550084

Schedule: 0x07867ca739ec78bc (ID = 0x0000000000000000)

Input List (10 items): (ID = 0x07867ca739ec78bc) Created: 04/21/2021 10:09:26 Last Modified: 04/21/2021 10:09:26

Report Template (5 fields): _OnD_0x07867ca739ec78bc (ID = 0x07867ca73a0c78bc) Created: 04/21/2021 10:06:48 Last Modified: 04/21/2021 10:06:48

Schedule dispatched via message queue (0x07867ca739ec78bc), Data source identifier (14E126701F7D4400920930553FDF8559)

Schedule Time: 04/21/2021 10:06:50

Processing started at 04/21/2021 10:06:50

Processing completed successfully at 04/21/2021 10:09:26

Extraction finished at 04/21/2021 10:09:26 UTC, with servers: tm02n02

Instrument <CSP,911760HW9> expanded to 0 RICS.

Instrument <CSP,79548KHM3> expanded to 0 RICS.

Instrument <CSP,194196BL4> expanded to 0 RICS.

Instrument <CSP,911760JQ0> expanded to 0 RICS.

Instrument <CSP,31282YAC3> expanded to 0 RICS.

Instrument <CSP,31352DCR9> expanded to 0 RICS.

Instrument <CSP,31352JRV1> expanded to 0 RICS.

Instrument <RIC,ALVG.DE> expanded to 1 RIC: ALVG.DE.

Instrument <RIC,IBM.N> expanded to 1 RIC: IBM.N.

Instrument <RIC,0001.HK> expanded to 1 RIC: 0001.HK.

Total instruments after instrument expansion = 3

Quota Message: INFO: Tick History Cash Quota Count Before Extraction: 3190; Instruments Approved for Extraction: 1; Tick History Cash Quota Count After Extraction: 3190, 638% of Limit; Tick History Cash Quota Limit: 500

Quota Message: ERROR: The RIC '0001.HK' in the request would exceed your quota limits. Adjust your input list to continue.

Quota Message: ERROR: The RIC 'ALVG.DE' in the request would exceed your quota limits. Adjust your input list to continue.

Quota Message: WARNING: Tick History Cash Quota has been reached or exceeded

Quota Message: Note: Quota has exceeded, however, it is not being enforced at this time but you can still make your extractions and instruments are still being counted. Please contact your Account Manager for questions.

Manifest: #RIC,Domain,Start,End,Status,Count

Manifest: 0001.HK,Market Price,2016-09-29T00:00:00.000000000Z,2016-09-29T23:00:00.000000000Z,Active,24

Manifest: ALVG.DE,Market Price,2016-09-29T00:00:00.000000000Z,2016-09-29T23:00:00.000000000Z,Active,24

Manifest: IBM.N,Market Price,2016-09-29T00:00:00.000000000Z,2016-09-29T23:00:00.000000000Z,Active,24

===============================================================================

Press Enter to continue

SUCCESS: processing completed successfully.

ERROR messages:

Quota Message: ERROR: The RIC '0001.HK' in the request would exceed your quota limits. Adjust your input list to continue.

Quota Message: ERROR: The RIC 'ALVG.DE' in the request would exceed your quota limits. Adjust your input list to continue.

WARNING messages:

Quota Message: WARNING: Tick History Cash Quota has been reached or exceeded

===============================================================================

Press Enter to continue

Intermediary results are discussed at length in the code explanations in the previous section of this tutorial.

Press Enter one final time to close the pop-up and end the program.

We find the output files here; their contents reflect what was displayed on screen:

Potential errors and solutions

If the user name and password were not set properly, an error will be returned. See Tutorial 1 for details.

Conclusions

This tutorial introduced the simplified high level calls for On Demand extraction requests.

It showed how instrument identifiers can be read from a CSV file, and how to log errors in the process.

It demonstrated how to set instrument validation options.

It also showed how extracted data could be written to file.